uv – The new Python Package Manager

A Developer’s Guide to Simplifying Environment Management

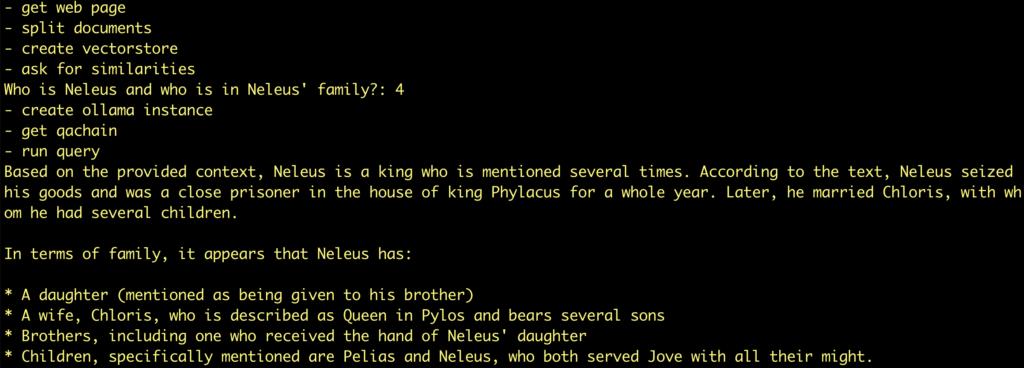

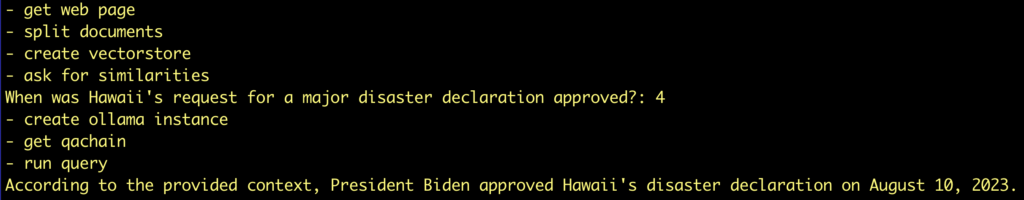

For developers, managing virtual environments is a crucial part of the workflow. With Python projects constantly shifting between dependencies and Python versions, tools that streamline this process are key. Enter uv: a tool designed to simplify creating and managing virtual environments, Python packages, and projects.

In this post, I’ll introduce you to uv, walk you through its installation, and provide some tips to help you get started.

What is uv?

uv is an extremely fast Python package and project manager, written in Rust. It creates virtual environments, installs packages, manages project dependencies through pyproject.toml, and can install Python versions, all from a single command-line tool.

By using uv, you can ensure that your virtual environments are created consistently across different projects without juggling several separate tools.

Why Use uv?

Managing Python projects often involves juggling various dependencies, versions, and configurations. Without proper tooling, this can become a headache. uv helps by:

- Standardizing virtual environments across projects, ensuring consistency.

- Simplifying project setup, requiring fewer manual steps to get your environment ready.

- Minimizing errors by running commands in the project's environment automatically (via uv run), so you are less likely to install into or run from the wrong one.

Hint

In our examples, before each command you will see our shell prompt:

❯

Don’t type the ❯ when you enter the command. So, when you see

❯ uv init

just type

uv init

In addition, when we activate the virtual environment, you will see a changed prompt:

✦ ❯

Installation and Setup

Getting started with uv is easy. Below are the steps for installing and setting up uv for your Python projects.

1. Install uv

On macOS or Linux, you can install uv with the official installer script:

❯ curl -LsSf https://astral.sh/uv/install.sh | sh

Alternatively, you can install uv using pip. You’ll need to have Python 3.8+ installed on your system.

❯ pip install uv

2. Create a New Virtual Environment

Once installed, you can use uv to create a virtual environment for your project. Simply navigate to your project directory and run:

❯ uv venv

This command creates a new virtual environment in the .venv folder within your project.

3. Activate the Virtual Environment

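After creating the virtual environment, activate it with the activation script that ships with it. uv has no activate command of its own. On macOS and Linux:

❯ source .venv/bin/activate

On Windows, run .venv\Scripts\activate instead. Alternatively, you can skip activation entirely: uv run followed by a command executes that command inside the environment.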

4. Install Your Dependencies

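Once the environment is active, you can install your project’s dependencies with uv's pip-compatible interface. Environments created with uv venv do not include pip by default, so use uv pip rather than plain pip:

✦ ❯ uv pip install -r requirements.txt

uv pip installs into the active virtual environment, or into a .venv folder in the current directory if none is active.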

You can also switch to a pyproject.toml file to manage your dependencies.

First you have to initialize the project:

❯ uv init

Then, add the dependency:

❯ uv add requests

Tips with virtual environments

When you use a virtual environment, its bin folder should be on your PATH.

By default this is .venv/bin, the location uv uses for a project's environment. The activation script adds this path to your $PATH variable.

But, if you want to choose a different folder, you must set the variable UV_PROJECT_ENVIRONMENT to this path:

❯ mkdir playground
❯ cd playground
❯ /usr/local/bin/python3.12 -m venv .venv/python/3.12
❯ . .venv/python/3.12/bin/activate
✦ ❯ which python
.../Playground/.venv/python/3.12/bin/python
✦ ❯ export UV_PROJECT_ENVIRONMENT=$PWD/.venv/python/3.12

✦ ❯ pip install uv
Collecting uv
  Downloading uv-0.4.25-py3-none-macosx_10_12_x86_64.whl.metadata (11 kB)
Downloading uv-0.4.25-py3-none-macosx_10_12_x86_64.whl (13.2 MB)
   ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 13.2/13.2 MB 16.5 MB/s eta 0:00:00
Installing collected packages: uv
Successfully installed uv-0.4.25
✦ ❯ which uv
.../Playground/.venv/python/3.12/bin/uv

✦ ❯ uv init
Initialized project `playground`

Without UV_PROJECT_ENVIRONMENT set, uv looks for the project environment in .venv, ignores the environment you activated, and prints a warning:

✦ ❯ uv add requests
warning: `VIRTUAL_ENV=.venv/python/3.12` does not match the project environment path `.../.venv/python/3.12` and will be ignored

Use the environment variable to tell uv where the virtual environment is installed.

✦ ❯ export UV_PROJECT_ENVIRONMENT=$PWD/.venv/python/3.12
✦ ❯ uv add requests
Resolved 6 packages in 0.42ms
Installed 5 packages in 8ms
 + certifi==2024.8.30
 + charset-normalizer==3.4.0
 + idna==3.10
 + requests==2.32.3
 + urllib3==2.2.3
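The lookup rule described above can be sketched as a small shell function. This is only an illustration of the behaviour, not uv's implementation. The name project_env_dir is a hypothetical helper, and the sketch assumes that a relative UV_PROJECT_ENVIRONMENT is resolved against the project root while an absolute one is used as-is.

```shell
# Illustration only: mirrors the lookup rule described above, not uv's own code.
# Usage: project_env_dir PROJECT_ROOT
# Prints the directory that would be expected to hold the project environment.
project_env_dir() {
    project_root=$1
    # Fall back to the default folder name when the variable is unset or empty.
    env_dir=${UV_PROJECT_ENVIRONMENT:-.venv}
    case $env_dir in
        /*) printf '%s\n' "$env_dir" ;;                # absolute path: used as-is
        *)  printf '%s\n' "$project_root/$env_dir" ;;  # relative path: joined to the project root
    esac
}
```

For example, with UV_PROJECT_ENVIRONMENT unset, calling project_env_dir "$PWD" prints the .venv folder inside the current directory.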

Tip

Use direnv to automatically set your environment:

- Install direnv: https://direnv.net/docs/installation.html

- Create the .envrc file in your project folder with this content:

. .venv/python/3.12/bin/activate
export UV_PROJECT_ENVIRONMENT=$PWD/.venv/python/3.12

- Allow the .envrc file:

✦ ❯ direnv allow
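If direnv is not available, a small shell function may do a similar job. The sketch below assumes a POSIX shell and the default .venv location, so adjust the path for a layout like .venv/python/3.12. The names find_venv_activate and activate_nearest_venv are hypothetical helpers, not part of uv or direnv.

```shell
# Hypothetical helper: walk upward from the current directory and print the
# first activation script found at .venv/bin/activate.
find_venv_activate() {
    dir=$PWD
    while :; do
        if [ -f "$dir/.venv/bin/activate" ]; then
            printf '%s\n' "$dir/.venv/bin/activate"
            return 0
        fi
        # Stop once the filesystem root has been checked.
        [ "$dir" = "/" ] && return 1
        dir=$(dirname "$dir")
    done
}

# Hypothetical wrapper: source the activation script if one was found.
activate_nearest_venv() {
    script=$(find_venv_activate) || { echo "no .venv found" >&2; return 1; }
    . "$script"
}
```

After defining both functions in your shell startup file, run activate_nearest_venv from anywhere inside a project to activate its environment.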

Common uv Commands

Here are a few more useful uv commands to keep in mind:

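- Deactivate the environment (a shell function provided by the activation script, not a uv command):

✦ ❯ deactivate

- Remove the environment by deleting its folder (uv remove does something different: it removes a dependency from the project):

❯ rm -rf .venv

- List the Python versions that uv can find or install:

❯ uv python list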

Tips for Using uv Effectively

- Consistent Environment Names: By default, uv uses .venv as the folder name for virtual environments. Stick to this default to keep things consistent across your projects.

- Integrate uv into your CI/CD pipeline: Ensure that your automated build tools use the same environment setup by adding uv commands to your pipeline scripts.

- Use uv in combination with pyproject.toml: If your project uses pyproject.toml for dependency management, uv sync brings the environment in line with the declared dependencies and the uv.lock file.

- Quick Switching: If you manage multiple Python projects, uv run executes commands in the environment of the project you are in, so you don't have to worry about which virtual environment is currently active.

- Automate Activation: Combine uv with direnv or add an activation hook in your shell to automatically activate the correct environment when you enter a project folder.
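As a rough sketch of the CI/CD tip above, a pipeline step could look like the following. It assumes a project with pyproject.toml and a committed uv.lock, and that pytest is one of the project's dependencies. The function names run and ci_pipeline and the DRY_RUN variable are hypothetical, chosen for this example only.

```shell
# Print each command before running it; with DRY_RUN set, only print it.
run() {
    echo "+ $*"
    [ -n "${DRY_RUN:-}" ] || "$@"
}

# Hypothetical pipeline step for a uv-managed project.
ci_pipeline() {
    # Install exactly what uv.lock specifies; fails if the lockfile is out of date.
    run uv sync --locked || return 1
    # Run the test suite inside the project environment (pytest is an assumption).
    run uv run pytest
}
```

Calling DRY_RUN=1 ci_pipeline prints the two commands without executing them, which is handy for checking the script before wiring it into a real pipeline.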

Cheatsheet

uv Command Cheatsheet

General Commands

| Command | Description |
| --- | --- |
| uv venv | Creates a new virtual environment in the .venv directory. |
| source .venv/bin/activate | Activates the virtual environment (shell command, macOS/Linux). |
| deactivate | Deactivates the active virtual environment (shell function from the activation script). |
| rm -rf .venv | Removes the virtual environment in the project (uv has no dedicated command for this). |
| uv python list | Lists the Python versions uv can find or install. |
| uv sync | Installs the project's dependencies from pyproject.toml and uv.lock. |
| uv pip install -r requirements.txt | Installs dependencies from a requirements.txt file. |
| uv pip [pip-command] | Runs a pip-compatible command against the virtual environment. |
| uv run python [args] | Runs Python within the project's environment. |
| uv run [command] | Runs any command within the project's environment, without activating it first. |

Working with Dependencies

| Command | Description |
| --- | --- |
| uv pip install [package] | Installs a Python package in the active environment. |
| uv pip uninstall [package] | Uninstalls a Python package from the environment. |
| uv pip freeze | Outputs a list of installed packages and their versions. |
| uv pip list | Lists all installed packages in the environment. |
| uv pip show [package] | Shows details about a specific installed package. |

Cleanup and Miscellaneous

| Command | Description |
| --- | --- |
| uv cache clean | Removes entries from uv's package cache. |
| uv self update | Updates uv itself to the latest version (when installed with the standalone installer). |

Helper Commands

| Command | Description |
| --- | --- |
| uv python find | Shows the path of the Python interpreter uv would use. |
| uv help | Displays help about available commands. |

More to read

Here is a short list of websites with documentation or other information about uv: