Developer Blog

Tips and tricks for developers and IT enthusiasts

Vue – Cookbook

Responsive Design

Reacting to Window Size Changes

<script>
export default {
  data() {
    return {
      isMobile: false,
      isDesktop: false,
      windowWidth: window.innerWidth,
      windowHeight: window.innerHeight,
    };
  },
  created() {
    // Read the initial size once, then keep it in sync on every resize
    this.updateWindowSize();
    window.addEventListener('resize', this.updateWindowSize);
  },
  // Lifecycle hooks must be top-level component options. Inside `methods`,
  // Vue never calls them, so the resize listener would never be removed.
  beforeUnmount() {
    window.removeEventListener('resize', this.updateWindowSize);
  },
  methods: {
    updateWindowSize() {
      this.windowWidth = window.innerWidth;
      this.windowHeight = window.innerHeight;
      this.checkIsMobile();
    },
    checkIsMobile() {
      // 768px is the mobile breakpoint used in this example
      this.isMobile = this.windowWidth <= 768;
      // Assumption: everything wider than the mobile breakpoint counts as desktop
      this.isDesktop = !this.isMobile;
    },
  },
};
</script>

Debugging

<script setup>
import {
  onActivated,
  onBeforeMount,
  onBeforeUnmount,
  onBeforeUpdate,
  onDeactivated,
  onErrorCaptured,
  onMounted,
  onRenderTriggered,
  onScopeDispose,
  onServerPrefetch,
  onUnmounted,
  onUpdated,
} from 'vue';

// Vue has no onCreated() hook: top-level code in <script setup> already runs at that point
console.log('setup() called');

onBeforeMount(() => { console.log('onBeforeMount() called'); });
onMounted(() => { console.log('onMounted() called'); });
onBeforeUpdate(() => { console.log('onBeforeUpdate() called'); });
onUpdated(() => { console.log('onUpdated() called'); });
onBeforeUnmount(() => { console.log('onBeforeUnmount() called'); });
onUnmounted(() => { console.log('onUnmounted() called'); });

// Only called for components cached inside <KeepAlive>
onActivated(() => { console.log('onActivated() called'); });
onDeactivated(() => { console.log('onDeactivated() called'); });

// Returning false stops the error from propagating to parent components
onErrorCaptured((err, instance, info) => {
  console.error('onErrorCaptured() called:', info, err);
  return false;
});

// Development-mode only. onRenderTracked() also exists but logs every tracked dependency and is very noisy
onRenderTriggered((e) => { console.log('onRenderTriggered() called', e); });

// Runs when the component's effect scope is stopped, i.e. when it is unmounted
onScopeDispose(() => { console.log('onScopeDispose() called'); });

// Only called during server-side rendering; the server waits for the returned Promise
onServerPrefetch(async () => { console.log('onServerPrefetch() called'); });
</script>

Learning | Hello World in different Languages

Python

print("Hello, World!")

Java

public class HelloWorld {
    public static void main(String[] args) {
        System.out.println("Hello, World!");
    }
}

C

#include <stdio.h>

int main() {
    printf("Hello, World!\n");
    return 0;
}

C++

#include <iostream>

int main() {
    std::cout << "Hello, World!" << std::endl;
    return 0;
}

JavaScript

console.log("Hello, World!");

Ruby

puts "Hello, World!"

Swift

print("Hello, World!")

Go

package main

import "fmt"

func main() {
	fmt.Println("Hello, World!")
}

Rust

fn main() {
    println!("Hello, World!");
}

PHP

<?php echo "Hello, World!"; ?>

Perl

print "Hello, World!\n";

Kotlin

fun main() {
    println("Hello, World!")
}

Scala

object HelloWorld {
  def main(args: Array[String]): Unit = {
    println("Hello, World!")
  }
}

Lua

print("Hello, World!")

Haskell

main :: IO ()
main = putStrLn "Hello, World!"

Dart

void main() {
  print('Hello, World!');
}

Shell

echo "Hello, World!"

Batch

@echo off
echo Hello, World!

PowerShell

Write-Output "Hello, World!"

VBScript

MsgBox "Hello, World!"

Objective-C

#import <Foundation/Foundation.h>

int main(int argc, const char * argv[]) {
    @autoreleasepool {
        NSLog(@"Hello, World!");
    }
    return 0;
}

Assembly

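; x86 (32-bit) Linux, NASM syntax
; build: nasm -f elf32 hello.asm && ld -m elf_i386 -o hello hello.o
section .data
    hello db 'Hello, World!', 10    ; the string followed by a newline (10 = '\n')
    len   equ $ - hello             ; length of the string in bytes

section .text
    global _start

_start:
    ; write our string to stdout
    mov eax, 4          ; sys_write
    mov ebx, 1          ; file descriptor 1 (stdout)
    mov ecx, hello      ; message to write
    mov edx, len        ; message length
    int 0x80            ; syscall

    ; exit
    mov eax, 1          ; sys_exit
    xor ebx, ebx        ; exit status 0
    int 0x80            ; syscall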

VBA (Visual Basic for Applications)

Sub HelloWorld()
    MsgBox "Hello, World!"
End Sub

Tcl

puts "Hello, World!"

COBOL

IDENTIFICATION DIVISION.
PROGRAM-ID. HELLO-WORLD.
PROCEDURE DIVISION.
    DISPLAY "Hello, World!".
    STOP RUN.

F#

printfn "Hello, World!"

Elixir

IO.puts "Hello, World!"

SQL (MySQL)

SELECT 'Hello, World!';

SQL (SQLite)

SELECT 'Hello, World!';

SQL (PostgreSQL)

SELECT 'Hello, World!';

SQL (Oracle)

SELECT 'Hello, World!' FROM DUAL;

SQL (SQL Server)

PRINT 'Hello, World!';

Smalltalk

Transcript show: 'Hello, World!'; cr

R

cat("Hello, World!\n")

Bash

echo "Hello, World!"

Erlang

-module(hello).
-export([hello_world/0]).

hello_world() ->
    io:fwrite("Hello, World!~n").

Julia

println("Hello, World!")

MATLAB

disp('Hello, World!');

AutoHotkey

MsgBox, Hello, World!

Clojure

(println "Hello, World!")

Groovy

println "Hello, World!"

OCaml

print_endline "Hello, World!"

D

import std.stdio;

void main()
{
    writeln("Hello, World!");
}

Crystal

puts "Hello, World!"

Nim

echo "Hello, World!"

Common Lisp

(format t "Hello, World!~%")

Scheme

(display "Hello, World!")
(newline)

Prolog

:- initialization(main).
main :- write('Hello, World!'), nl, halt.

ABAP

REPORT ZHELLO_WORLD.
WRITE: / 'Hello, World!'.

VB.NET

Module HelloWorld
    Sub Main()
        Console.WriteLine("Hello, World!")
    End Sub
End Module

AI Environment | Writing Apps for OpenAI, ChatGPT, Ollama and others

Python UI Frameworks

- Gradio

  Gradio is the fastest way to demo your machine learning model with a friendly web interface so that anyone can use it, anywhere!

- Streamlit

  Streamlit turns data scripts into shareable web apps in minutes. All in pure Python. No front‑end experience required.

- HyperDiv

  Open-source framework for rapidly building reactive web apps in Python, with built-in Shoelace components, Markdown, charts, tables, and more.

- Shoelace

  A forward-thinking library of web components. It is not a Python framework itself, but the component library behind HyperDiv's built-in components.

Working with local LLMs

List of Frameworks and Tools

Ollama

curl -fsSL https://ollama.com/install.sh | sh

ollama run llama2
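Besides the interactive CLI, Ollama also serves a local HTTP API that your own apps can call. The following TypeScript sketch makes a few assumptions. It expects the default address (port 11434) and a model that has already been pulled (llama2, as above). It also needs a runtime with a global fetch, such as Node 18+ or a browser. The names buildGenerateRequest and generate are hypothetical helpers, not part of an Ollama SDK.

```typescript
// Assumption: Ollama runs locally on its default port
const OLLAMA_URL = "http://localhost:11434/api/generate";

// Request body for Ollama's /api/generate endpoint (only the fields used here)
interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

// Only the field this sketch reads; the real response carries more metadata
interface GenerateResponse {
  response: string;
}

// Kept separate from the network call so the body can be inspected offline
function buildGenerateRequest(model: string, prompt: string): GenerateRequest {
  // stream: false asks for one JSON object instead of a stream of partial chunks
  return { model, prompt, stream: false };
}

async function generate(model: string, prompt: string): Promise<string> {
  const res = await fetch(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildGenerateRequest(model, prompt)),
  });
  if (!res.ok) {
    throw new Error(`Ollama request failed: ${res.status} ${res.statusText}`);
  }
  const data = (await res.json()) as GenerateResponse;
  return data.response;
}
```

For example, generate("llama2", "Why is the sky blue?").then(console.log) prints the model's answer. Turning streaming off keeps the sketch short. With the default (streaming on), Ollama returns newline-delimited JSON chunks that a chat UI would read incrementally.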

LLM

pip install llm
llm install llm-gpt4all
llm -m the-model-name "Your query"

llm aliases set falcon ggml-model-gpt4all-falcon-q4_0
llm -m ggml-model-gpt4all-falcon-q4_0 "Tell me a joke about computer programming"
# once the alias is set, llm -m falcon "..." works as well

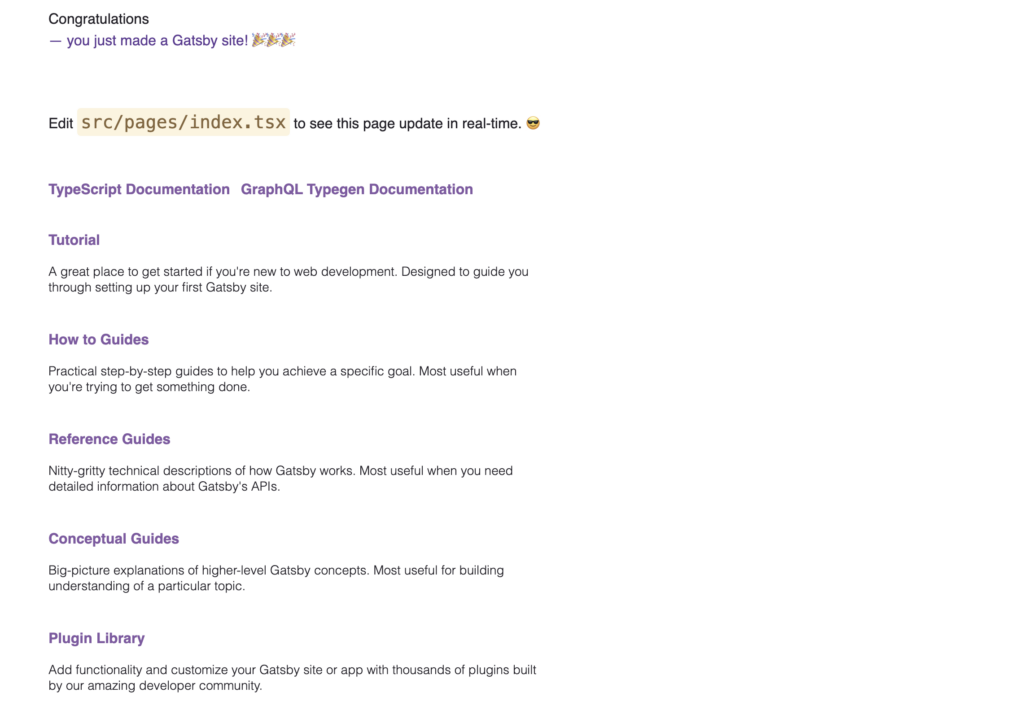

Gatsby | Getting started with Gatsby

Installation

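Create a new site with npm or pnpm. Both commands start the same interactive installer:

npm init gatsby

pnpm create gatsby

A complete installer run looks like this:

❯ npm create gatsby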

.../21.6.2/pnpm/store/v3/tmp/dlx-42253 | +3 +

.../21.6.2/pnpm/store/v3/tmp/dlx-42253 | Progress: resolved 3, reused 1, downloaded 2, added 3, done

create-gatsby version 3.13.1

Welcome to Gatsby!

What would you like to call your site?

✔ Getting-Started-with-Gatsby/ site

✔ Will you be using JavaScript or TypeScript?

· TypeScript

✔ Will you be using a CMS?

· No (or I'll add it later)

✔ Would you like to install a styling system?

· Tailwind CSS

✔ Would you like to install additional features with other plugins?

· Add responsive images

· Add an automatic sitemap

· Generate a manifest file

· Add Markdown and MDX support

Thanks! Here's what we'll now do:

🛠 Create a new Gatsby site in the folder site

🎨 Get you set up to use Tailwind CSS for styling your site

🔌 Install gatsby-plugin-image, gatsby-plugin-sitemap, gatsby-plugin-manifest, gatsby-plugin-mdx

✔ Shall we do this? (Y/n) · Yes

✔ Created site from template

✔ Installed Gatsby

✔ Installed plugins

✔ Created site in site

🔌 Setting-up plugins...

info Adding gatsby-plugin-postcss

info Adding gatsby-plugin-image

info Adding gatsby-plugin-sitemap

info Adding gatsby-plugin-manifest

info Adding gatsby-plugin-mdx

info Adding gatsby-plugin-sharp

info Adding gatsby-transformer-sharp

info Adding gatsby-source-filesystem

info Adding gatsby-source-filesystem

info Installed gatsby-plugin-postcss in gatsby-config

success Adding gatsby-plugin-postcss to gatsby-config - 0.224s

info Installed gatsby-plugin-image in gatsby-config

success Adding gatsby-plugin-image to gatsby-config - 0.221s

info Installed gatsby-plugin-sitemap in gatsby-config

success Adding gatsby-plugin-sitemap to gatsby-config - 0.230s

info Installed gatsby-plugin-manifest in gatsby-config

success Adding gatsby-plugin-manifest to gatsby-config - 0.258s

info Installed gatsby-plugin-mdx in gatsby-config

success Adding gatsby-plugin-mdx to gatsby-config - 0.264s

info Installed gatsby-plugin-sharp in gatsby-config

success Adding gatsby-plugin-sharp to gatsby-config - 0.265s

info Installed gatsby-transformer-sharp in gatsby-config

success Adding gatsby-transformer-sharp to gatsby-config - 0.269s

info Installed gatsby-source-filesystem in gatsby-config

success Adding gatsby-source-filesystem (images) to gatsby-config - 0.279s

info Installed gatsby-source-filesystem in gatsby-config

success Adding gatsby-source-filesystem (pages) to gatsby-config - 0.286s

🎨 Adding necessary styling files...

🎉 Your new Gatsby site Getting Started with Gatsby has been successfully created

at /Users/Shared/CLOUD/Programmier-Workshops/Kurse/Gatsby/Einsteiger/Getting-Started-with-Gatsby/site.

Start by going to the directory with

cd site

Start the local development server with

npm run develop

See all commands at

https://www.gatsbyjs.com/docs/reference/gatsby-cli/

❯ cd site
❯ npm install
❯ npm run develop
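The installer registers every plugin from the log above in gatsby-config.ts. The result should look roughly like the following sketch. Exact contents differ between create-gatsby versions, so check it against your generated file. The siteUrl is a placeholder, and the manifest icon path is an assumption based on the starter's default layout.

```typescript
import type { GatsbyConfig } from "gatsby";

const config: GatsbyConfig = {
  siteMetadata: {
    title: "Getting Started with Gatsby",
    // Placeholder: gatsby-plugin-sitemap needs the real URL of the deployed site
    siteUrl: "https://www.yourdomain.tld",
  },
  plugins: [
    "gatsby-plugin-postcss", // Tailwind CSS is wired in through PostCSS
    "gatsby-plugin-image",
    "gatsby-plugin-sitemap",
    {
      resolve: "gatsby-plugin-manifest",
      // Assumption: the starter's default icon location
      options: { icon: "src/images/icon.png" },
    },
    "gatsby-plugin-mdx",
    "gatsby-plugin-sharp",
    "gatsby-transformer-sharp",
    // gatsby-source-filesystem is registered twice, once per folder it reads
    {
      resolve: "gatsby-source-filesystem",
      options: { name: "images", path: "./src/images/" },
    },
    {
      resolve: "gatsby-source-filesystem",
      options: { name: "pages", path: "./src/pages/" },
    },
  ],
};

export default config;
```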

PHP | Ecosystem

PHPStan

Finds bugs in your code without writing tests. It’s open-source and free.

PHPMD: PHP Mess Detector

PHPMD takes a given PHP code base and looks for several potential problems within that source. These problems can be things like:

- Possible bugs

- Suboptimal code

- Overcomplicated expressions

- Unused parameters, methods, properties

PHPMD is a mature project and provides a diverse set of predefined rules (though maybe not as many as its Java sibling PMD) to detect code smells and possible errors in the analyzed source code. Check out the rules section of the PHPMD documentation to learn more about all implemented rules.

PHP CodeSniffer

PHP_CodeSniffer tokenizes PHP files and detects violations of a defined set of coding standards.

GrumPHP

Sick and tired of defending code quality over and over again? GrumPHP will do it for you! This composer plugin will register some git hooks in your package repository. When somebody commits changes, GrumPHP will run some tests on the committed code. If the tests fail, you won’t be able to commit your changes. This handy tool will not only improve your codebase, it will also teach your co-workers to write better code following the best practices you’ve determined as a team.

API Platform

https://api-platform.com

Laravel | Working with Swagger

Step 1: Create a Laravel project

If you don't have a Laravel project yet, create one with Composer. Open your terminal and run the following command:

composer create-project --prefer-dist laravel/laravel App

Replace App with the project name you want.

Step 2: Install the swagger-php library

You need the swagger-php library to generate Swagger documentation. Install it with Composer:

composer require zircote/swagger-php

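Step 3: Create API routes

In Laravel, you define your API routes in routes/api.php. In Laravel 11 and later, this file only exists after running php artisan install:api. Create the routes as you normally would for your API:

routes/api.php

<?php

use App\Http\Controllers\UserController;
use Illuminate\Support\Facades\Route;

// Array syntax: the 'UserController@index' string syntax only works when a controller namespace prefix is configured
Route::get('/users', [UserController::class, 'index']);
Route::post('/users', [UserController::class, 'store']);
Route::get('/users/{id}', [UserController::class, 'show']);

Step 4: Add Swagger annotations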

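Document your API by adding Swagger (OpenAPI) annotations to your controller methods. Current swagger-php versions use the @OA\ prefix; the older @SWG\ prefix belongs to swagger-php 2.x. Routes from routes/api.php are served under the /api prefix, hence path="/api/users". Here is an example of an annotated controller method:

// at the top of the controller file
use OpenApi\Annotations as OA;

/**
 * @OA\Get(
 *     path="/api/users",
 *     summary="Fetch a list of users",
 *     tags={"Users"},
 *     @OA\Response(response=200, description="Successful operation"),
 *     @OA\Response(response=400, description="Invalid request")
 * )
 */
public function index()
{
    // Your API logic here
}

For more information on the annotations, see the swagger-php documentation. Generation also needs one @OA\Info annotation somewhere in the scanned code, for example @OA\Info(title="My API", version="1.0.0") in a doc block on the base Controller class. Without it, the generator reports an error.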

Step 5: Install and configure L5-Swagger

Once your controllers are annotated, you need to generate the Swagger documentation. The darkaonline/l5-swagger package provides an artisan command for this.

First, install the package:

composer require darkaonline/l5-swagger

Then publish the Swagger configuration:

php artisan vendor:publish --provider "L5Swagger\L5SwaggerServiceProvider"

Edit config/l5-swagger.php to adjust the Swagger configuration as needed.

Step 6: Generate the Swagger documentation

Run the following command to generate the Swagger documentation:

php artisan l5-swagger:generate

The Swagger UI is then available at http://127.0.0.1:8000/api/documentation, assuming the development server was started with php artisan serve.

Step 7: Open the Swagger UI

Open the Swagger UI URL in your browser.

Astro Tutorial – Add a Table of Contents (TOC) to the Post Layout

TL;DR

Code for this tutorial is on GitHub.

Starter Project

Create Starter

❯ npm create astro@latest

.../21.6.2/pnpm/store/v3/tmp/dlx-5990 | +39 ++++

.../21.6.2/pnpm/store/v3/tmp/dlx-5990 | Progress: resolved 39, reused 39, downloaded 0, added 39, done

astro Launch sequence initiated.

dir Where should we create your new project?

./Tutorial - Add TOC to PostLayout

tmpl How would you like to start your new project?

Use blog template

ts Do you plan to write TypeScript?

Yes

use How strict should TypeScript be?

Strict

deps Install dependencies?

Yes

git Initialize a new git repository?

Yes

✔ Project initialized!

■ Template copied

■ TypeScript customized

■ Dependencies installed

■ Git initialized

next Liftoff confirmed. Explore your project!

Enter your project directory using cd "./Tutorial - Add TOC to PostLayout"

Run pnpm dev to start the dev server. CTRL+C to stop.

Add frameworks like react or tailwind using astro add.

Stuck? Join us at https://astro.build/chat

╭─────╮ Houston:

│ ◠ ◡ ◠ Good luck out there, astronaut! 🚀

╰─────╯

Run Starter

❯ cd Tutorial\ -\ Add\ TOC\ to\ PostLayout/
❯ npm run dev

> tutorial---add-toc-to-postlayout@0.0.1 dev /Users/Shared/CLOUD/Programmier-Workshops/Kurse/Astro/Einsteiger/Tutorial - Add TOC to PostLayout
> astro dev

astro v4.5.12 ready in 300 ms

┃ Local    http://localhost:4321/
┃ Network  use --host to expose

12:18:45 watching for file changes...

Modify the Project Structure and Add Helper Code

In tsconfig.json, map the path alias @/* to src/*:

{
  "extends": "astro/tsconfigs/strict",
  "compilerOptions": {
    "allowJs": true,
    "strictNullChecks": true,
    "baseUrl": ".",
    "lib": ["es2022", "dom", "dom.iterable"],
    "paths": {
      "@/*": ["src/*"]
    }
  },
  "exclude": ["node_modules", "**/node_modules/*", ".vscode", "dist"]
}

In every page, replace relative import paths with the path alias. For example,

import Layout from '../../layouts/BlogPost.astro';

becomes

import Layout from '@/layouts/BlogPost.astro';

and the stylesheet import (a side-effect import, not a layout)

import '../styles/global.css';

becomes

import '@/styles/global.css';

Create Separate Layouts for Projects and Posts

Rename src/layouts/BlogPost.astro to src/layouts/BaseLayout.astro

Change every import of BlogPost to

import Layout from '@/layouts/BaseLayout.astro';
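For the TOC itself, the headings are needed. In Astro 4, rendering a content-collection entry (const { Content, headings } = await post.render();) also returns a flat list of { depth, slug, text } objects. A minimal TypeScript sketch of a helper that nests this list for a TOC could look like the following. buildToc, TocItem and the default depth range (h2 to h3) are assumptions, not part of Astro.

```typescript
// Shape of one heading as returned by Astro's render() (mirrors Astro's MarkdownHeading)
interface Heading {
  depth: number; // 1 for <h1>, 2 for <h2>, ...
  slug: string;  // id attribute of the heading, used as the #anchor
  text: string;  // visible heading text
}

// A TOC entry: a heading plus the headings nested below it
interface TocItem extends Heading {
  children: TocItem[];
}

// Turns the flat, document-ordered list into a tree: each heading becomes a
// child of the closest preceding heading with a smaller depth.
function buildToc(headings: Heading[], minDepth = 2, maxDepth = 3): TocItem[] {
  const root: TocItem[] = [];
  const stack: TocItem[] = []; // path from the top level down to the last added item

  for (const h of headings) {
    // Skip the post title (h1) and very deep headings
    if (h.depth < minDepth || h.depth > maxDepth) continue;
    const item: TocItem = { ...h, children: [] };

    // Pop until the top of the stack is a valid parent (strictly smaller depth)
    while (stack.length > 0 && stack[stack.length - 1].depth >= item.depth) {
      stack.pop();
    }
    if (stack.length === 0) {
      root.push(item);
    } else {
      stack[stack.length - 1].children.push(item);
    }
    stack.push(item);
  }
  return root;
}
```

In the post layout, the resulting tree can be rendered as nested lists of links to #slug. Keeping the helper free of Astro imports means it can be tested on its own.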

The Art of AI: Creating and Recreating Images

Today, I want to find out how to create and recreate images with ChatGPT.

Challenge: I found an image and want to recreate it:

Write a Prompt that creates this Image

Create an image of a woman with long, sleek black hair and striking features. She should have a strong, confident posture with her arms crossed. She's wearing a simple, elegant black dress with a round neckline. The background is a modern, minimalist office with a hint of sophistication. The color palette should be neutral, with emphasis on shades of grey and black to match her attire. The lighting is soft and diffused, highlighting her from the front and casting a gentle shadow behind her. The overall aesthetic is chic and polished with a touch of corporate professionalism.

Write a Prompt that creates this Image

Create an image of a poised and professional woman with long, sleek black hair and prominent, striking features. She stands with a sense of authority, her arms confidently crossed in front of her. She is dressed in a simple yet sophisticated black dress with a modest round neckline. The setting is a contemporary, minimalist office space that exudes a refined elegance. The color scheme is a harmonious blend of neutral tones, dominated by greys and blacks that complement her attire. Soft, ambient lighting bathes the scene, casting a gentle illumination that accentuates her silhouette against the muted sophistication of the office backdrop. The composition should convey an atmosphere of chic professionalism and understated luxury.

Write a Prompt that creates this Image

Create an image of an elegant and professional woman with long, black hair and defined, striking features. She exhibits a posture of confidence and composure with her arms crossed. Her attire is a chic, form-fitting black dress with a modest neckline. The environment is a stylish, minimalist office setting with sophisticated furnishings and a neutral color scheme emphasizing grays and blacks. The scene is illuminated with soft, ambient lighting that casts a gentle glow on the woman, highlighting her assertive stance against the contemporary backdrop, evoking a sense of refined grace and corporate elegance.

Notes:

Most of the time, when I ran the same prompt a second time, ChatGPT responded with something like this:

So I asked ChatGPT nicely to do it again:

Create the image again, because each time the image looks different

Next Step: find the precise prompt

As we can see, the same prompt produces different images. So I asked ChatGPT how to write a prompt that produces the same image every time:

Me

Using one prompt multiple times, each image is different from another. How can I state the prompt more clearly so that it creates exactly the same image each time?

ChatGPT

Creating exactly the same image multiple times using a text-to-image AI model like DALL-E is challenging due to the inherent variability in how these models interpret and render prompts. Each time a prompt is processed, the model generates a unique interpretation based on its training and algorithms, which can lead to variations in the resulting images. However, there are some strategies you can use to minimize these differences:

- Be Highly Specific: Provide as many details as possible in your prompt, including specifics about posture, facial expression, clothing, and background elements. The more detailed your description, the less room there is for the AI to interpret differently.
- Use Precise Descriptors: Instead of using general terms, use precise descriptors. For example, instead of saying “long, black hair,” specify the style, such as “long, straight, black hair that falls to mid-back.”
- Define the Scene Layout: Be explicit about the composition of the image, like where the subject is positioned in the frame, how much of the background is visible, and the perspective or angle of the view.
- Describe Colors and Lighting: Clearly state the colors you want to be used, including shades and tones, and describe the lighting (e.g., “soft, diffused lighting from the front left”).
- Include Comparable Examples: If possible, reference a similar existing image or style to guide the AI’s interpretation.
- Consistency in Format: If you’re looking for a portrait, landscape, or specific aspect ratio, mention this explicitly.

Even with these strategies, it’s important to remember that AI models like DALL-E are probabilistic and may still produce variations with each rendering. The nature of these models means they interpret prompts creatively, so exact replication of images is not always possible.

OK, let's try:

Me

Using your suggestions, create a precise prompt that creates this image. Use all 6 strategies in your prompt.

ChatGPT

Create a digital portrait of a woman who embodies elegance and professionalism. She should have long, straight, jet-black hair with a glossy sheen, cascading down to mid-back. Her facial features are sharply defined, with high cheekbones, almond-shaped hazel eyes, meticulously groomed eyebrows, and full, neutral-colored lips. Her skin has a matte finish with a porcelain-like quality. Her stance is assertive; she stands straight with her shoulders back and arms crossed at the waist, showcasing a silver bracelet on her right wrist and a matching ring on her left middle finger. She wears a tailored, form-fitting black sheath dress with three-quarter sleeves and a boat neckline, ending just above the knees. The setting is an upscale, minimalist office: to her right is a steel-gray filing cabinet with a potted green succulent on top; to her left, a sleek, black office chair behind a dark walnut desk, on which rests a closed silver laptop and a white ceramic mug. The background comprises floor-to-ceiling windows with thin, vertical blinds casting soft shadows, and the walls are a light gray. The lighting is soft and directional, coming from the top left, creating a subtle highlight on her left cheek and a soft shadow to her right, enhancing her features without harshness. The overall color palette is monochrome, with a focus on blacks, whites, and grays, providing contrast against her black dress and the lighter office environment. The composition is centered and balanced, with the woman placed in the middle of the frame, allowing for an equal amount of office space on either side of her. The camera angle is at eye level, ensuring a direct and engaging connection with the viewer. The image should convey a narrative of confidence, sophistication, and modern professional elegance.

Wow, impressive. OK, here are the images created by this prompt:

The results look more alike, but they are still not identical.

So I asked ChatGPT again:

Me

The images still look different. Is there a deterministic way to uniquely generate an image?

ChatGPT

AI image generation models like DALL-E are designed to introduce variation and creativity into their outputs. Even with highly detailed prompts, they are not deterministic in the traditional sense — you won’t get the exact same image with each generation because the model incorporates an element of randomness or “seed” into each creation process. This randomness is a core feature, as it enables the generation of unique images from the same prompt.

However, in some advanced implementations of these models, you can specify a “seed” value, which can help in producing the same image across multiple runs if the seed is kept constant. The “seed” is essentially a starting point for the random number generator used in the generation process. By using the same seed, you tell the AI to start from the same random point, leading to the same sequence of “random” numbers, which can result in the same output.

At the moment, the system you are interacting with does not provide an option to control the seed value manually for each request. Each new image generation is treated as a fresh instance, without a way to replicate the exact randomness of a previous run.

Therefore, while you can guide the AI with very specific and detailed prompts to achieve close approximations, there is currently no way to ensure that a publicly available AI image generation model like DALL-E will produce the exact same image twice.
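Here is a toy TypeScript sketch that makes the seed idea concrete. It uses mulberry32, a small, well-known pseudo-random generator. A hypothetical generatePixels function stands in for an image model. The sketch only illustrates why a fixed seed reproduces the output; it says nothing about how DALL-E works internally.

```typescript
// mulberry32: a tiny 32-bit pseudo-random number generator.
// The same seed always yields the same sequence of numbers in [0, 1).
function mulberry32(seed: number): () => number {
  let state = seed >>> 0; // keep the state an unsigned 32-bit integer
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical stand-in for an image model: the "image" is a list of grey values (0-255).
// All randomness comes from the seeded generator, so the seed fully determines the output.
function generatePixels(seed: number, count: number): number[] {
  const rand = mulberry32(seed);
  return Array.from({ length: count }, () => Math.floor(rand() * 256));
}
```

Calling generatePixels(42, 16) twice returns identical arrays, while a different seed gives a different one. This mirrors the situation ChatGPT describes: without control over the seed, every request starts from a fresh random state.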

OK, so we won't get 100% identical images.

Me

Could you suggest the general structure of an image-creating prompt, so that this prompt creates nearly the same images each time I run the prompt?

ChatGPT

To achieve the most consistent results with an AI image generation model like DALL-E, you should craft a prompt that is detailed and specific, reducing the room for interpretation as much as possible. Here’s a general structure you can follow:

- Subject Description: Start with a detailed description of the main subject, including physical characteristics, expressions, and attire.
  - Example: “Create an image of a woman in her 30s with shoulder-length curly brown hair, green eyes, wearing a navy blue blazer and white shirt.”
- Posture and Expression: Clearly describe the subject’s posture, facial expression, and any gestures.
  - Example: “She has a friendly smile, direct eye contact with the viewer, and her hands are folded in her lap.”
- Setting and Background: Detail the setting, including specific objects, colors, and the overall atmosphere.
  - Example: “She is seated on a red armchair in a well-lit room with bookshelves filled with colorful books in the background.”
- Lighting and Shadows: Describe the lighting direction and intensity, as well as the resulting shadows.
  - Example: “There is soft lighting coming from the left, creating a gentle shadow on the right side of her face.”
- Color Palette: Specify the colors you want to be dominant in the image.
  - Example: “The color palette is warm, with rich browns and creams, accentuating the wooden elements of the furniture and books.”
- Camera Angle and Composition: Mention the perspective of the image, such as the angle and framing.
  - Example: “The viewpoint is at eye level, capturing the upper body of the woman, centered in the frame with equal space on both sides.”
- Additional Details: Add any other specific details that you want to include.
  - Example: “She wears a silver pendant necklace and has a small mole on her right cheek.”

Here’s how it would look put together:

“Create an image of a woman in her 30s with shoulder-length curly brown hair and green eyes, smiling friendly at the viewer, seated on a red armchair with hands folded in her lap. She is wearing a navy blue blazer and white shirt. The room is well-lit, with soft lighting coming from the left creating a gentle shadow on the right side of her face. The background is filled with bookshelves with colorful books. The color palette is warm, with rich browns and creams. The viewpoint is eye level, framing the woman in the center with equal space on both sides. She wears a silver pendant necklace and has a small mole on her right cheek.”

Even with this structure, slight variations can occur due to the stochastic nature of AI image generation. However, providing a highly structured and detailed prompt like the one above can significantly increase the consistency of the images produced.
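The structure above maps naturally onto a small prompt builder. Here is a minimal TypeScript sketch, assuming the seven parts are passed as separate fields; ImagePromptParts and buildImagePrompt are hypothetical names. The builder always emits the parts in the same order, so the same inputs always produce the same prompt text. The generated images can still differ.

```typescript
// The seven parts of the prompt structure, in the order they appear in the prompt
interface ImagePromptParts {
  subject: string;
  postureAndExpression: string;
  setting: string;
  lighting: string;
  colorPalette: string;
  composition: string;
  details?: string; // optional extras
}

function buildImagePrompt(p: ImagePromptParts): string {
  const parts = [
    `Create an image of ${p.subject.trim()}`,
    p.postureAndExpression,
    p.setting,
    p.lighting,
    p.colorPalette,
    p.composition,
    p.details ?? "",
  ];
  return parts
    .map((s) => s.trim())
    .filter((s) => s.length > 0) // drop empty optional parts
    .map((s) => (/[.!?]$/.test(s) ? s : `${s}.`)) // make every part a complete sentence
    .join(" ");
}
```

Using named fields instead of one free-form string also makes it harder to forget one of the seven parts when you rewrite a prompt.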

OK, let’s try it out:

Me

Create an image of a woman in her 30s with shoulder-length curly brown hair and green eyes, smiling friendly at the viewer, seated on a red armchair with hands folded in her lap. She is wearing a navy blue blazer and white shirt. The room is well-lit, with soft lighting coming from the left creating a gentle shadow on the right side of her face. The background is filled with bookshelves with colorful books. The color palette is warm, with rich browns and creams. The viewpoint is eye level, framing the woman in the center with equal space on both sides. She wears a silver pendant necklace and has a small mole on her right cheek.