Docker | Create an extensible build environment

TL;DR

The complete code for the post is here.

General Information

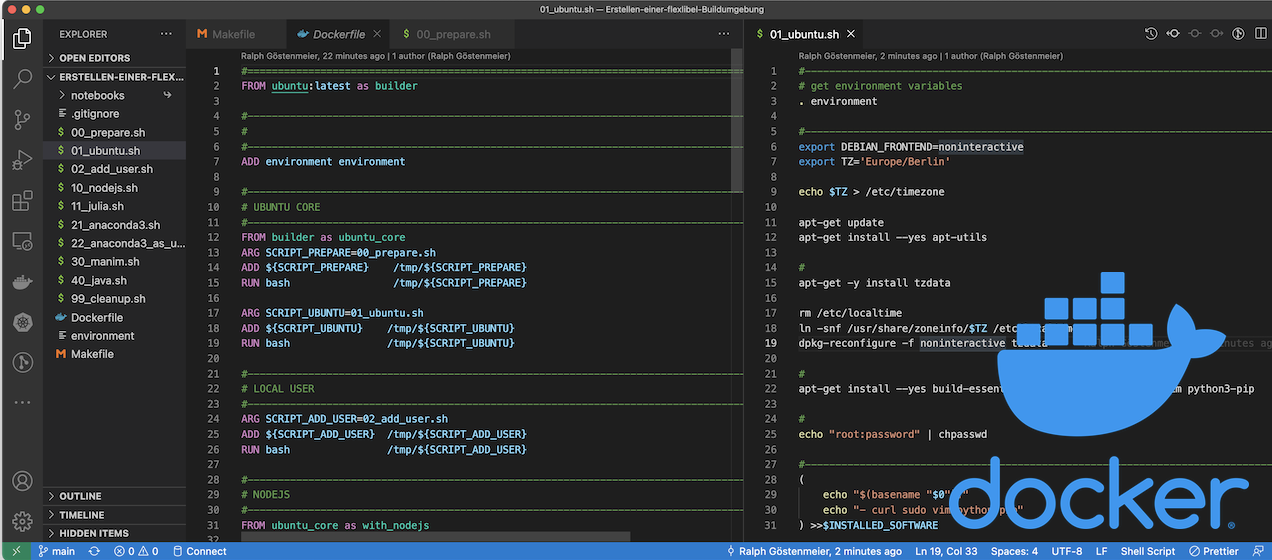

Building a Docker image mainly means creating a Dockerfile that specifies all the components to install and configure.

As a result, a Dockerfile usually contains many operating system commands for installing and configuring software and packages.

Keeping all of these commands in a single file (the Dockerfile) quickly becomes hard to read and maintain.

In this post I describe and explain an extensible structure for a Docker project that lets you reuse individual components in other builds.

General Structure

The basic idea behind the structure is to split all installation instructions into separate installation scripts and call them individually in the Dockerfile.

In the end, the structure will look something like this (some additional pieces are added and described later):

RUN bash /tmp/${SCRIPT_UBUNTU}

RUN bash /tmp/${SCRIPT_ADD_USER}

RUN bash /tmp/${SCRIPT_NODEJS}

RUN bash /tmp/${SCRIPT_JULIA}

RUN bash /tmp/${SCRIPT_ANACONDA}

RUN cat ${SCRIPT_ANACONDA_USER} | su user

RUN bash /tmp/${SCRIPT_CLEANUP}

For example, the preparation of the Ubuntu image, which installs basic tools, is moved into the script 01_ubuntu.sh.

Hint: There are hardly any restrictions on the choice of names (variables and files/scripts). For the scripts, I use numbering to express order.

The script contains this code:

apt-get update

apt-get install --yes apt-utils

apt-get install --yes build-essential lsb-release curl sudo vim python3-pip

echo "root:root" | chpasswd
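The numbering mentioned in the hint above can be illustrated with a small sketch. It assumes the script names used in this post and creates empty placeholder files in a temporary directory (the directory and the loop are only for illustration, they are not part of the build). A shell glob returns the names sorted, so the two-digit prefixes determine the order:

```shell
# Illustration only: empty placeholder files named like the scripts in this post.
workdir="$(mktemp -d)"
for name in 99_cleanup.sh 10_nodejs.sh 01_ubuntu.sh 21_anaconda3.sh 02_add_user.sh 11_julia.sh; do
  : > "$workdir/$name"
done

# Glob expansion is sorted, so the numeric prefixes define the sequence.
ordered=""
for path in "$workdir"/[0-9][0-9]_*.sh; do
  ordered="$ordered ${path##*/}"
done

echo "Execution order:$ordered"
rm -rf "$workdir"
```

Leaving gaps in the numbering (01, 02, 10, 11, 21, ...) makes it easy to slot in a new script later without renaming the existing ones.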

Since we will be working with several scripts, they need to share information. For example, one script installs a package and another one needs to know the installation folder.

We will therefore store the information needed by multiple scripts in a separate file, the environment file environment:

#--------------------------------------------------------------------------------------

USR_NAME=user

USR_HOME=/home/user

GRP_NAME=work

#--------------------------------------------------------------------------------------

ANACONDA=anaconda3

ANACONDA_HOME=/opt/$ANACONDA

ANACONDA_INSTALLER=/tmp/installer_${ANACONDA}.sh

JULIA=julia

JULIA_HOME=/opt/${JULIA}-1.7.2

JULIA_INSTALLER=/tmp/installer_${JULIA}.tgz

Each installation script must start with a preamble that loads this environment file:

#--------------------------------------------------------------------------------------

# get environment variables

. environment
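To see what the preamble does, here is a minimal sketch that can be run outside of Docker. It assumes a copy of the Julia entries from the environment file, written to a temporary directory (the directory and the echo lines are only for illustration). After sourcing the file with `.`, its variables are available to the rest of the script:

```shell
# Illustration only: write a small copy of the environment file to a temp dir.
workdir="$(mktemp -d)"
cat > "$workdir/environment" <<'EOF'
JULIA=julia
JULIA_HOME=/opt/${JULIA}-1.7.2
JULIA_INSTALLER=/tmp/installer_${JULIA}.tgz
EOF

# The preamble: source the file so its variables are set in this shell.
. "$workdir/environment"

# Any later command in the script can now use the shared values.
echo "Julia will be installed to: $JULIA_HOME"
echo "Installer is stored as:     $JULIA_INSTALLER"

rm -rf "$workdir"
```

Because the file is sourced rather than executed, references such as `${JULIA}` are expanded in the calling script, so every installation script sees the same values.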

Preparing Dockerfile and Build Environment

When building an image, Docker needs all files and scripts that are run inside the image. Since we created the installation scripts outside of the image, we need to copy them into the image (and then run them). This also applies to the environment file environment.

File copying is done by the Docker ADD statement.

First we need our environment file in the image, so let’s copy this:

#======================================================================================

FROM ubuntu:latest as builder

#--------------------------------------------------------------------------------------

ADD environment environment

Each block that installs one required piece of software looks like this. To stay flexible, we use variables for the script names.

ARG SCRIPT_UBUNTU=01_ubuntu.sh

ADD ${SCRIPT_UBUNTU} /tmp/${SCRIPT_UBUNTU}

RUN bash /tmp/${SCRIPT_UBUNTU}

Note: We can’t run the script directly because its executable bit may not be set. So we use bash to run the text file as a script.
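The effect described in the note can be reproduced locally with a short sketch. It assumes a throwaway script named 01_demo.sh in a temporary directory (both names are made up for this illustration). With the executable bit removed, calling the file directly is refused, while passing it to bash still works:

```shell
# Illustration only: a throwaway script without the executable bit.
workdir="$(mktemp -d)"
script="$workdir/01_demo.sh"
printf '%s\n' 'echo "hello from script"' > "$script"
chmod a-x "$script"

# Direct execution needs the executable bit, so this attempt is refused.
if "$script" 2>/dev/null; then
  direct_result="ran"
else
  direct_result="refused"
fi

# bash only needs to read the file, so this works regardless of the bit.
via_bash="$(bash "$script")"

echo "direct execution: $direct_result"
echo "via bash:         $via_bash"

rm -rf "$workdir"
```

An alternative would be to set the bit with chmod in the Dockerfile, but calling bash explicitly avoids that extra step.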

As an add-on, we will be using Docker’s multi-stage builds. So here is the final code:

#--------------------------------------------------------------------------------------

# UBUNTU CORE

#--------------------------------------------------------------------------------------

FROM builder as ubuntu_core

ARG SCRIPT_UBUNTU=01_ubuntu.sh

ADD ${SCRIPT_UBUNTU} /tmp/${SCRIPT_UBUNTU}

RUN bash /tmp/${SCRIPT_UBUNTU}

Final results

Dockerfile

Here is the final Dockerfile:

#==========================================================================================

FROM ubuntu:latest as builder

#------------------------------------------------------------------------------------------

#

#------------------------------------------------------------------------------------------

ADD environment environment

#------------------------------------------------------------------------------------------

# UBUNTU CORE

#------------------------------------------------------------------------------------------

FROM builder as ubuntu_core

ARG SCRIPT_UBUNTU=01_ubuntu.sh

ADD ${SCRIPT_UBUNTU} /tmp/${SCRIPT_UBUNTU}

RUN bash /tmp/${SCRIPT_UBUNTU}

#------------------------------------------------------------------------------------------

# LOCAL USER

#------------------------------------------------------------------------------------------

ARG SCRIPT_ADD_USER=02_add_user.sh

ADD ${SCRIPT_ADD_USER} /tmp/${SCRIPT_ADD_USER}

RUN bash /tmp/${SCRIPT_ADD_USER}

#------------------------------------------------------------------------------------------

# NODEJS

#-----------------------------------------------------------------------------------------

FROM ubuntu_core as with_nodejs

ARG SCRIPT_NODEJS=10_nodejs.sh

ADD ${SCRIPT_NODEJS} /tmp/${SCRIPT_NODEJS}

RUN bash /tmp/${SCRIPT_NODEJS}

#--------------------------------------------------------------------------------------------------

# JULIA

#--------------------------------------------------------------------------------------------------

FROM with_nodejs as with_julia

ARG SCRIPT_JULIA=11_julia.sh

ADD ${SCRIPT_JULIA} /tmp/${SCRIPT_JULIA}

RUN bash /tmp/${SCRIPT_JULIA}

#---------------------------------------------------------------------------------------------

# ANACONDA3 with Julia Extensions

#---------------------------------------------------------------------------------------------

FROM with_julia as with_anaconda

ARG SCRIPT_ANACONDA=21_anaconda3.sh

ADD ${SCRIPT_ANACONDA} /tmp/${SCRIPT_ANACONDA}

RUN bash /tmp/${SCRIPT_ANACONDA}

#---------------------------------------------------------------------------------------------

#

#---------------------------------------------------------------------------------------------

FROM with_anaconda as with_anaconda_user

ARG SCRIPT_ANACONDA_USER=22_anaconda3_as_user.sh

ADD ${SCRIPT_ANACONDA_USER} /tmp/${SCRIPT_ANACONDA_USER}

#RUN cat ${SCRIPT_ANACONDA_USER} | su user

#---------------------------------------------------------------------------------------------

#

#---------------------------------------------------------------------------------------------

FROM with_anaconda_user as with_cleanup

ARG SCRIPT_CLEANUP=99_cleanup.sh

ADD ${SCRIPT_CLEANUP} /tmp/${SCRIPT_CLEANUP}

RUN bash /tmp/${SCRIPT_CLEANUP}

#=============================================================================================

USER user

WORKDIR /home/user

#

CMD ["bash"]
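Because every component lives in its own named stage, a build can also stop at one of these stages by using the `--target` option of `docker build`. The following sketch only prints the commands instead of running them, so it works without Docker. The helper `build_cmd` and the tagging scheme `image:stage` are assumptions made for this illustration; the image name is the one used in the Makefile of this post.

```shell
# Illustration only: print one `docker build` command per stage.
IMAGE="playground_docker"
STAGES="ubuntu_core with_nodejs with_julia with_anaconda with_cleanup"

# Hypothetical helper: compose the build command for a single stage.
build_cmd() {
  printf 'docker build --target %s -t %s:%s .\n' "$1" "$IMAGE" "$1"
}

for stage in $STAGES; do
  build_cmd "$stage"
done
```

Running one of the printed commands, for example the one for with_julia, should produce an image that contains everything up to and including Julia, but none of the later stages.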

Makefile

HERE := ${CURDIR}

CONTAINER := playground_docker

default:

	cat Makefile

build:

	docker build -t ${CONTAINER} .

clean:

	docker_rmi_all

run:

	docker run -it --rm -p 127.0.0.1:8888:8888 -v ${HERE}:/src:rw -v ${HERE}/notebooks:/notebooks:rw --name ${CONTAINER} ${CONTAINER}

notebook:

	docker run -it --rm -p 127.0.0.1:8888:8888 -v ${HERE}:/src:rw -v ${HERE}/notebooks:/notebooks:rw --name ${CONTAINER} ${CONTAINER} bash .local/bin/run_jupyter

Installation scripts

01_ubuntu.sh

#--------------------------------------------------------------------------------------------------

# get environment variables

. environment

#--------------------------------------------------------------------------------------------------

export DEBIAN_FRONTEND=noninteractive

export TZ='Europe/Berlin'

echo $TZ > /etc/timezone

apt-get update

apt-get install --yes apt-utils

#

apt-get -y install tzdata

rm /etc/localtime

ln -snf /usr/share/zoneinfo/$TZ /etc/localtime

dpkg-reconfigure -f noninteractive tzdata

#

apt-get install --yes build-essential lsb-release curl sudo vim python3-pip

#

echo "root:password" | chpasswd