Ollama | Getting Started

Installation

Read here for details.

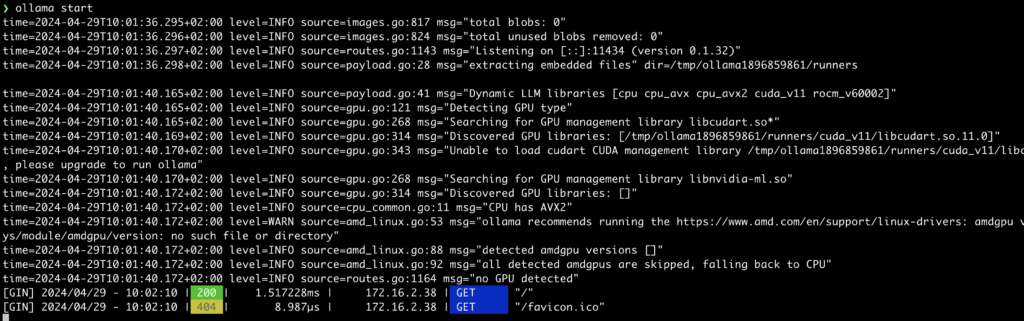

Start Ollama Service

ollama start

This starts the Ollama service and binds it to the default address and port, 127.0.0.1:11434 (ollama start is an alias for ollama serve).

If you want to access the service from another host or client, bind it to 0.0.0.0 so that it listens on all network interfaces.

systemctl stop ollama.service
export OLLAMA_HOST=0.0.0.0:11434
ollama start

To change the address permanently for the systemd service, edit the file /etc/systemd/system/ollama.service and add the Environment line to the [Service] section:

[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="OLLAMA_HOST=0.0.0.0:11434"

[Install]
WantedBy=default.target

After saving the file, reload systemd and restart the service so the change takes effect:

sudo systemctl daemon-reload
sudo systemctl restart ollama.service

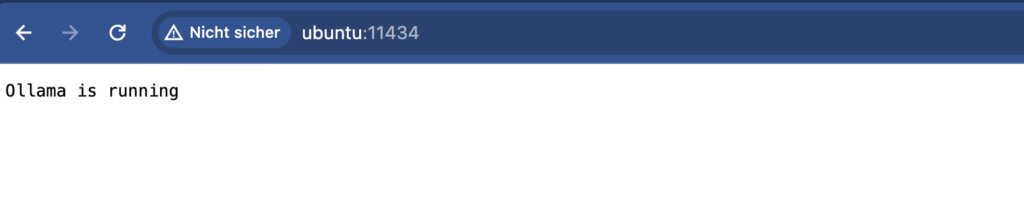

Access the Ollama service from your browser at http://<ip-address of host>:11434
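
The browser check can also be scripted, which is handy when testing access from another machine. The following is a minimal sketch using only the Python standard library. The helper names service_url and is_ollama_running are illustrative and not part of Ollama, and the sketch assumes the service answers a plain GET on its root URL with HTTP 200, as the browser request does.

```python
import urllib.request


def service_url(host: str, port: int = 11434) -> str:
    """Build the base URL of an Ollama service (hypothetical helper)."""
    return f"http://{host}:{port}/"


def is_ollama_running(url: str, timeout: float = 3.0) -> bool:
    """Return True if a GET on the base URL succeeds with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts and urllib's URLError/HTTPError
        return False
```

For example, is_ollama_running(service_url("192.168.1.50")) checks a host at that (made-up) address. Note that 0.0.0.0 is only a bind address on the server side; clients should use the real IP address or name of the host.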

Sample Python Chat

Install Python

Install Ollama Library

pip install ollama

Create the chat program

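#!/usr/bin/env python

from ollama import Client

# Connect to the local Ollama service (default port 11434)
ollama = Client(host='http://127.0.0.1:11434')

# Send a single user message to the llama3 model
response = ollama.chat(model='llama3', messages=[
    {
        'role': 'user',
        'content': 'Why is the sky blue?',
    },
])

# Print the text of the model's reply
print(response['message']['content'])

Run the program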

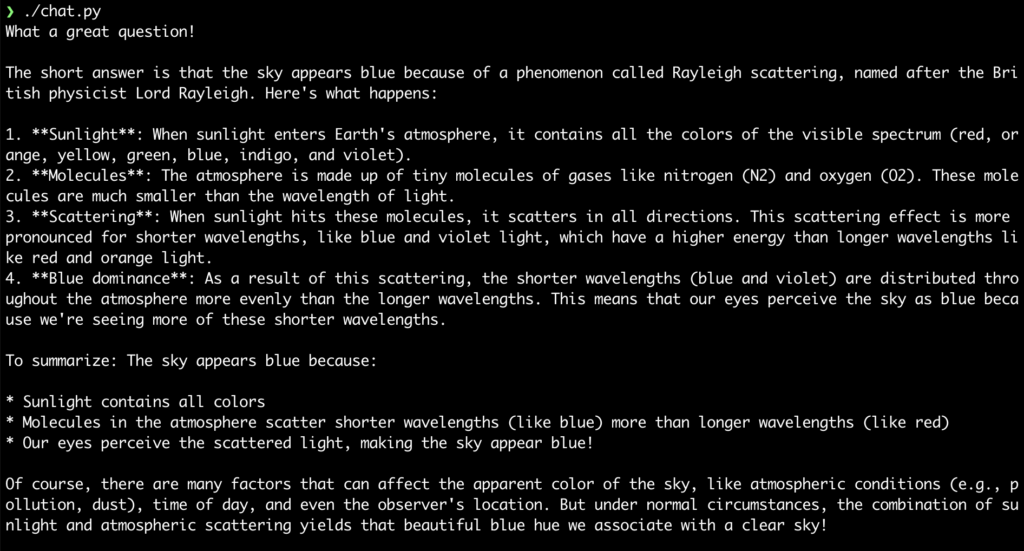

❯ chmod +x chat.py
❯ ./chat.py
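
The chat program sends a single message. Because the chat call takes a list of messages, a conversation can be continued by appending each reply to that list before the next call. The sketch below assumes the response has the same shape as in the chat program (response['message']['content']). The function name ask and the history list are illustrative. The client is passed in as a parameter, so any object with a compatible chat method works.

```python
def ask(client, history, prompt, model="llama3"):
    """Send one user turn and record both sides of it in `history`.

    `client` is assumed to behave like ollama.Client from the chat program:
    client.chat(model=..., messages=[...]) returns a mapping whose
    ['message']['content'] entry holds the reply text.
    """
    history.append({"role": "user", "content": prompt})
    response = client.chat(model=model, messages=history)
    reply = response["message"]["content"]
    # Keep the assistant's answer so the next call sees the full conversation
    history.append({"role": "assistant", "content": reply})
    return reply


# Usage (requires a running Ollama service and `pip install ollama`):
#
#   from ollama import Client
#   client = Client(host="http://127.0.0.1:11434")
#   history = []
#   print(ask(client, history, "Why is the sky blue?"))
#   print(ask(client, history, "Explain that to a five-year-old."))
```

Keeping the history in a plain list leaves it up to the caller when to trim or reset the conversation.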

Hint: To see what is happening with the service, follow its log

❯ sudo journalctl -u ollama.service -f

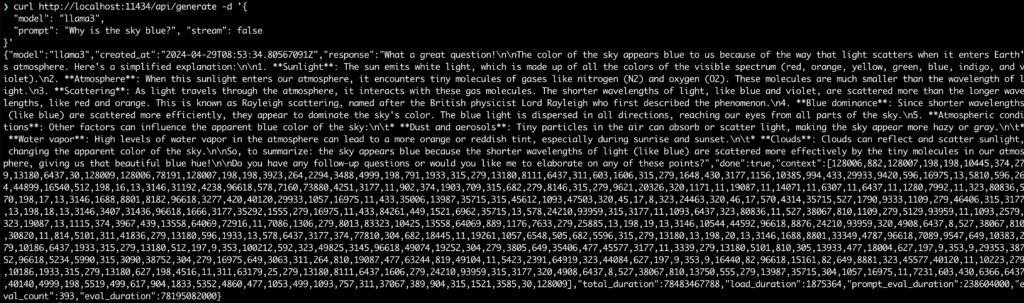

Query the API

Read the API definition here

curl http://localhost:11434/api/generate -d '{
  "model": "llama2",
  "prompt": "Why is the sky blue?",
  "stream": false
}'
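
The same request can be sent from Python without extra packages. The sketch below uses only the standard library and sends the same JSON body as the curl command. The function names are illustrative, and it assumes that with "stream": false the endpoint returns a single JSON object whose response field holds the generated text.

```python
import json
import urllib.request


def build_generate_request(prompt, model="llama2",
                           base_url="http://localhost:11434"):
    """Build a POST request with the same JSON body as the curl command."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text.

    Assumes the non-streaming reply is one JSON object with a 'response' key.
    """
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the service running and the model already available locally, generate("Why is the sky blue?") returns the answer as a string.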
